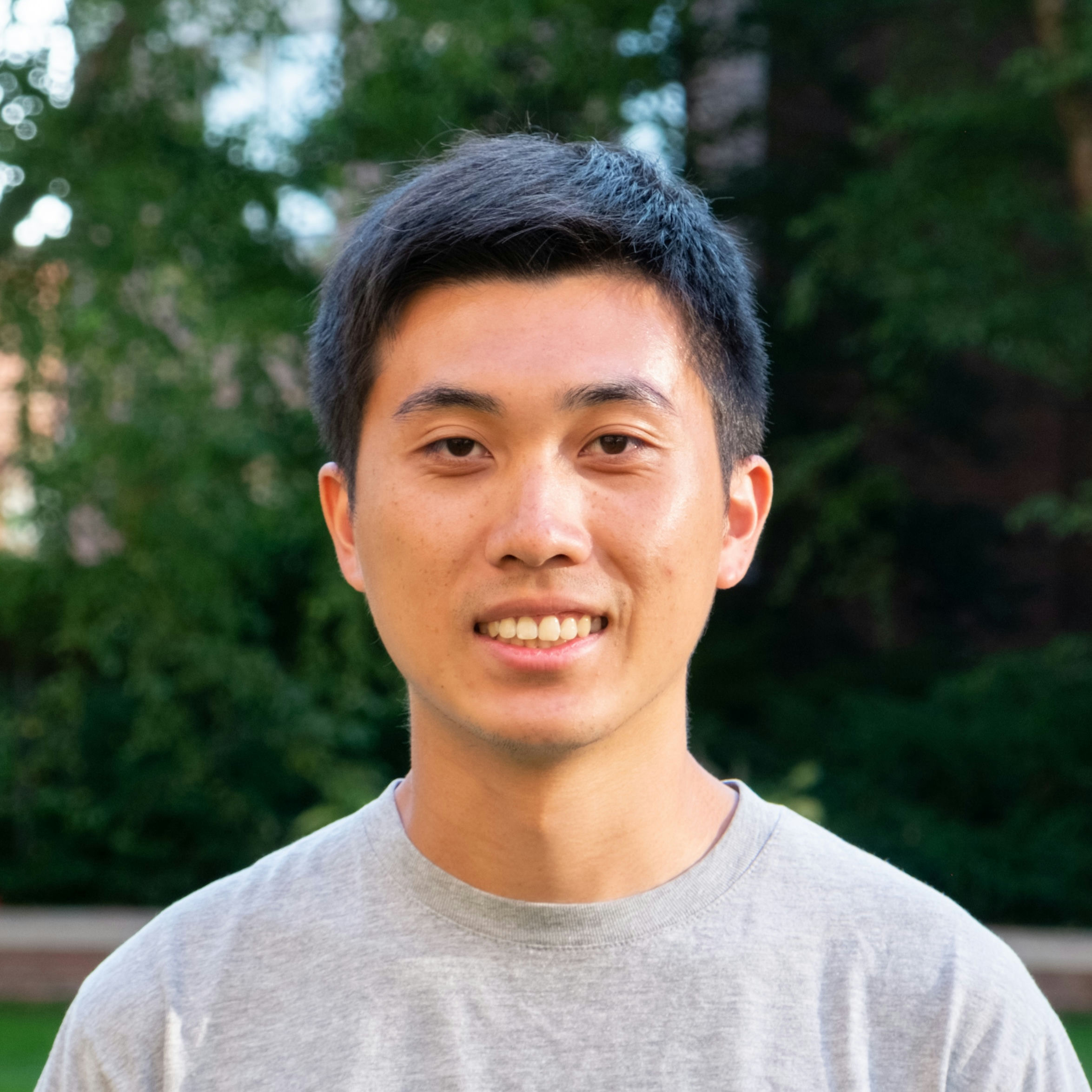

Artem Lukoianov is a Ph.D. candidate specializing in computer vision and graphics. His research focuses on diffusion models, with prior work exploring the distributions learned by image generative models and their applications to 3D shape generation.

Poster Abstract: In this work we develop an analytical framework to demystify the capabilities and limitations of modern diffusion-based image generative models. Although these models have achieved impressive results, their inner workings remain opaque due to their black-box nature, which hampers their reliability and ethical deployment in industry applications. Our work addresses this gap by leveraging a closed-form analytical model that gives the optimal solution to the training objectives under constraints imposed by differing architectural choices.

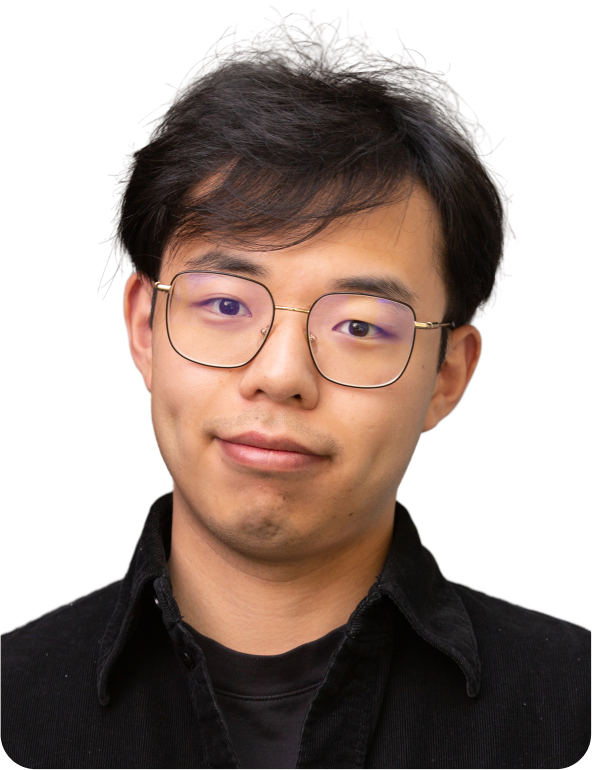

Peter Chen is a third-year PhD student at MIT CSAIL, co-advised by Principal Research Scientist Mike Cafarella and Adjunct Professor Mike Stonebraker. Chen is interested in improving the performance and efficiency of LLMs in the context of information retrieval and complex reasoning.

Poster Abstract: Real-world open-domain questions can be complex, especially when answering them requires integrating information from multiple sources. Effectively identifying the necessary information involves aligning it with the available data and its organization. However, existing RAG solutions address the alignment problem in a limited manner. Using off-the-shelf LLMs for question decomposition lacks awareness of the available data and its structure, often resulting in suboptimal retrieval performance. Alternatively, iteratively generating follow-up queries and interacting with the data collection, as explored in agentic RAG approaches, shows potential but is often inefficient since each successive query depends on previous results rather than being guided by the overall organization of the available data. To address the alignment problem, we introduce an LLM-based retrieval method—ARM, designed to better align questions with the organization of the data collection. Instead of solely matching query utterance, ARM explores relationships among data objects, enabling a retrieve-all-at-once solution for complex queries. Experimental results demonstrate that ARM significantly outperforms existing RAG methods on various complex open-domain QA tasks across multiple modalities, achieving superior retrieval performance and downstream accuracy while significantly lowering monetary costs.

Courtney Golden is a second-year PhD student at MIT EECS in CSAIL, where she is co-advised by Professor Daniel Sanchez and Professor of the Practice Joel Emer. Golden’s research centers on computer architecture, with a focus on combining hardware and software techniques to improve performance and energy efficiency. She is interested in accelerating sparse and irregular applications, with an eye towards scalability. Currently, her work focuses on spatial architectures for graph algorithms and sparse tensor algebra. Prior to beginning her PhD, Golden received a B.S. in Electrical and Computer Engineering from Cornell University and interned at Amazon Web Services, Marvell Semiconductor, and GlobalFoundries. At MIT, she is a recipient of the Jacobs Presidential Fellowship and the National Science Foundation Graduate Research Fellowship.

Poster Abstract: Sparse matrix computations lie at the heart of many scientific computing and graph processing algorithms. On conventional systems, their irregular memory accesses and low arithmetic intensity create memory bandwidth bottlenecks. While distributed-SRAM architectures overcome this memory bottleneck and have been widely used for iterative sparse computations, existing systems suffer from either poor compute core performance or poor programmability across diverse applications. We propose combining the high-bandwidth local storage of distributed-SRAM systems with reconfigurable compute nodes to deliver both high performance and high programmability. Our exploration of large-scale reconfigurable computing systems uncovers challenges in data partitioning, which we solve with novel heuristic techniques. Our architecture and data partitioning techniques together achieve gmean 32.8x speedup over prior state-of-the-art across six different iterative sparse matrix workloads.

Edan Orzech is a 3rd year PhD student at MIT CSAIL with 3+ years of research experience in game theory and coding theory. He has expertise in mathematical research in game theory, and has demonstrated ability in developing mathematical models of interactions between agents and proving equilibrium properties and rational behavior properties of players in these models.

Poster Abstract: We consider zero-sum games in which players move between adjacent states, where in each pair of adjacent states one state dominates the other. The states in our game can represent positional advantages in physical conflict such as high ground or camouflage, or product characteristics that lend an advantage over competing sellers in a duopoly. We study the equilibria of the game as a function of the topological and geometric properties of the underlying graph. Our main result characterizes the expected payoff of both players starting from any initial position, under the assumption that the graph does not contain certain types of small cycles. This characterization leverages the block-cut tree of the graph, a construction that describes the topology of the biconnected components of the graph. We identify three natural types of (on-path) pure equilibria, and characterize when these equilibria exist under the above assumptions. On the geometric side, we show that strongly connected outerplanar graphs with undirected girth at least always support some of these types of on-path pure equilibria. Finally, we show that a data structure describing all pure equilibria can be efficiently computed for these games.
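For readers unfamiliar with the block-cut tree mentioned in the abstract, the following is a minimal sketch of the construction, not the authors' code. It assumes a simple undirected graph given as an adjacency dictionary; the function names `biconnected_components` and `block_cut_tree` are illustrative. It finds the biconnected components (blocks) and cut vertices with a depth-first search, then links each block to the cut vertices it contains.

```python
from typing import Dict, List, Optional, Set, Tuple


def biconnected_components(adj: Dict[int, List[int]]) -> Tuple[List[Set[int]], Set[int]]:
    """Return (blocks, cut_vertices) of a simple undirected graph."""
    index: Dict[int, int] = {}   # DFS discovery order of each vertex
    low: Dict[int, int] = {}     # lowest discovery index reachable from the subtree
    edge_stack: List[Tuple[int, int]] = []
    blocks: List[Set[int]] = []
    cuts: Set[int] = set()
    counter = [0]

    def dfs(u: int, parent: Optional[int]) -> None:
        index[u] = low[u] = counter[0]
        counter[0] += 1
        children = 0
        for v in adj[u]:
            if v == parent:
                continue
            if v not in index:
                edge_stack.append((u, v))
                children += 1
                dfs(v, u)
                low[u] = min(low[u], low[v])
                if low[v] >= index[u]:
                    # u separates v's subtree from the rest of the graph.
                    # The DFS root is a cut vertex only with 2+ children.
                    if parent is not None or children > 1:
                        cuts.add(u)
                    block: Set[int] = set()
                    while True:
                        a, b = edge_stack.pop()
                        block.update((a, b))
                        if (a, b) == (u, v):
                            break
                    blocks.append(block)
            elif index[v] < index[u]:
                # Back edge to an ancestor.
                edge_stack.append((u, v))
                low[u] = min(low[u], index[v])

    for start in adj:
        if start not in index:
            dfs(start, None)
    return blocks, cuts


def block_cut_tree(adj: Dict[int, List[int]]):
    """Edges of the block-cut tree as (block_id, cut_vertex) pairs."""
    blocks, cuts = biconnected_components(adj)
    tree = [(i, c) for i, block in enumerate(blocks) for c in sorted(cuts & block)]
    return blocks, cuts, tree


# Two triangles sharing vertex 2, plus a pendant edge 4-5.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3, 4], 3: [2, 4], 4: [2, 3, 5], 5: [4]}
blocks, cuts, tree = block_cut_tree(graph)
```

In this example graph, vertices 2 and 4 are the cut vertices, and the three blocks are the two triangles and the pendant edge.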

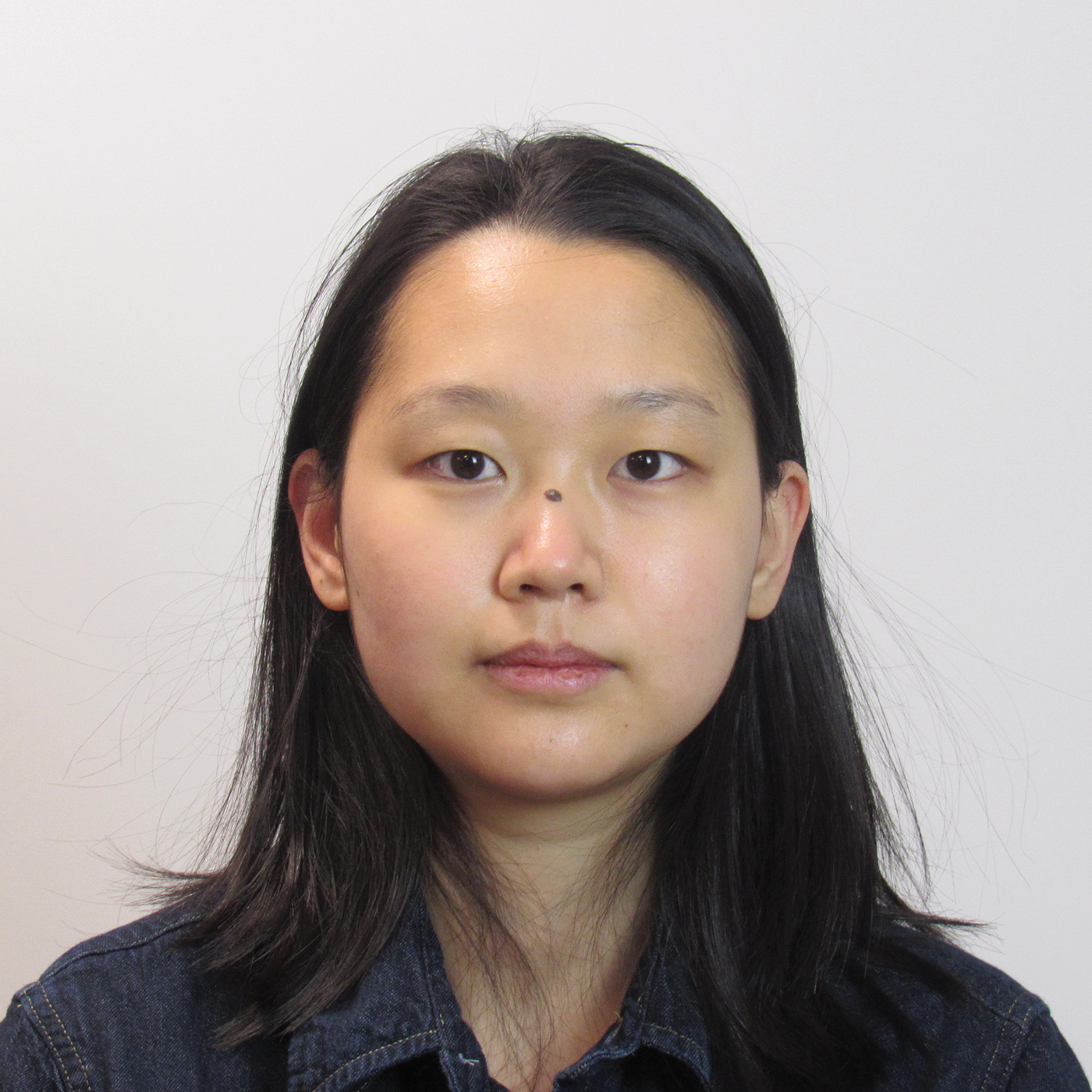

Eugenie Lai is a fourth-year PhD student in the Data Systems Group at MIT CSAIL, advised by Principal Research Scientist Michael Cafarella. Her research focuses on automating and optimizing data preparation and transformation pipelines, with the goal of making data more accessible and usable for a wide variety of data consumers, such as enterprise users. Her work spans topics such as data transformation prediction, explainable discretization, and agentic systems for data science workflows.

Poster Abstract: Business Intelligence (BI) plays a critical role in empowering modern enterprises to make informed data-driven decisions, and has grown into a billion-dollar business. Self-service BI tools like Power BI and Tableau have democratized the “dashboarding” phase of BI, by offering user-friendly, drag-and-drop interfaces that are tailored to non-technical enterprise users. However, despite these advances, we observe that the “data preparation” phase of BI continues to be a key pain point for BI users today.

Kimia Noorbakhsh is a PhD student in the Electrical Engineering and Computer Science department at MIT, where she is a member of CSAIL. She is co-advised by Associate Professor Mohammad Alizadeh and Professor Hari Balakrishnan in the Networking and Mobile Systems group. Her research focuses on general reasoning and self-improvement of Large Language Models (LLMs) and, more specifically, leveraging LLMs for System design.

Poster Abstract: Assessing and enhancing human learning through question-answering is vital, yet automating this process remains challenging. While large language models (LLMs) excel at summarization and query responses, their ability to generate meaningful questions for learners is underexplored.

Michael Gilbert is a third-year PhD student at the Energy-Efficient Multimedia Systems Group, co-advised by Professor Vivienne Sze and Professor of the Practice Joel Emer. His research focuses on energy-efficient accelerator design. His most recent projects explored how to efficiently utilize on-chip memory to reduce data movement, which consumes a significant amount of energy and may cause performance bottlenecks.

Poster Abstract: Latency and energy consumption are key metrics in the performance of deep neural network (DNN) accelerators. A significant factor contributing to latency and energy is data transfer. One method to reduce data transfers is to reuse data when multiple operations use the same data. Fused-layer accelerators reuse data across operations in different layers by retaining intermediate data in on-chip buffers, which has been shown to reduce energy consumption and latency. Moreover, the intermediate data is often tiled (i.e., broken into chunks) to reduce the on-chip buffer capacity required to reuse the data. Because on-chip buffer capacity is frequently more limited than computation units, fused-layer dataflow accelerators may also recompute certain parts of the intermediate data instead of retaining them in a buffer. Achieving efficient trade-offs between on-chip buffer capacity, off-chip transfers, and recomputation requires systematic exploration of the fused-layer dataflow design space. However, prior work only explored a subset of the design space, and more efficient designs are left unexplored.

Dr. Jehanzeb Mirza is a postdoc in the Spoken Language Systems group at MIT CSAIL, advised by Senior Research Scientist James Glass. His research focuses on multi-modal learning, particularly improving fine-grained understanding. He earned his PhD in Computer Science (specializing in computer vision) from TU Graz, Austria, under the supervision of Prof. Horst Bischof, and his Master’s from KIT, Germany.

Poster Abstract: We present GLOV, a framework that reduces the manual effort required to craft effective natural language prompts for vision-language models (VLMs). Instead of human intervention, GLOV employs large language models (LLMs) as implicit optimizers, iteratively refining VLM prompts by ranking and optimizing them based on task performance. Additionally, we guide the LLM’s generation by incorporating an embedding space steering vector during autoregressive generation, biasing it toward more effective prompts at each optimization step. We evaluate GLOV across multiple downstream tasks and VLM architectures, demonstrating its strong generalization ability.

Om Chabra is a PhD student at CSAIL. His research focuses on new system designs for disaster response, both minimizing the time needed to detect natural disasters and ensuring network connectivity during them. He previously graduated with his BS in Computer Science from UIUC.

Poster Abstract: We present a new city-scale decentralized mesh network system suited for disaster recovery and emergencies. When wide-area connectivity is unavailable or significantly degraded, our system, MapMesh, enables static access points and mobile devices equipped with Wi-Fi in a city to route packets via each other for intra-city connectivity and to/from any nodes that might have Internet access, e.g., via satellite. The chief contribution of our work is a new routing protocol that scales to millions of nodes, a significant improvement over prior work on wireless mesh and mobile ad hoc networks. Our approach uses detailed information about buildings from widely available maps—data that was unavailable at scale over a decade ago, but is widely available now—to compute paths in a scalable and robust way.

Pantea Karimi Babaahmadi is a Ph.D. candidate in the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT. She is a member of the Networking and Mobile Systems Group, advised by Associate Professor Mohammad Alizadeh and Professor Hari Balakrishnan. She is also a student researcher in the Networking research group at Microsoft Research. Babaahmadi is focused on designing, analyzing, and automatically mitigating heuristics used in Systems and Networking. Her work integrates verification and optimization tools to evaluate and improve heuristic performance, while also leveraging large language models (LLMs) to design more creative and adaptive heuristics. She is the recipient of the Neekeyfar fellowship for demonstrating academic excellence, and she received an M.S. in EECS from MIT in 2023 and a B.S. in Electrical Engineering from the Sharif University of Technology.

Poster Abstract: Assessing and enhancing human learning through question-answering is vital, yet automating this process remains challenging. While large language models (LLMs) excel at summarization and query responses, their ability to generate meaningful questions for learners is underexplored.

We propose Savaal, a scalable question-generation system with three objectives: (i) scalability, enabling question generation from hundreds of pages of text, (ii) depth of understanding, producing questions beyond factual recall to test conceptual reasoning, and (iii) domain-independence, automatically generating questions across diverse knowledge areas. Instead of providing an LLM with large documents as context, Savaal improves results with a three-stage processing pipeline. Our evaluation with 76 human experts on 71 papers and PhD dissertations shows that Savaal generates questions that better test depth of understanding by 6.5X for dissertations and 1.5X for papers compared to a direct-prompting LLM baseline. Notably, as document length increases, Savaal's advantages in higher question quality and lower cost become more pronounced.

Pascal Passigan is an undergraduate in course 6, studying AI and decision making. He has experience developing novel deep learning methodologies, deploying web-based software in production, and coordinating with many contributors.

Poster Abstract: We present Mantis, an AI-human interactive platform for navigating complex scientific and enterprise knowledge landscapes. Leveraging multi-modal embeddings of proteins, chemicals, patients, documents, and organizational workflows, Mantis enables intuitive, visual, and agentic interactions with AI-generated latent spaces. This tool facilitates scientific discovery, enhances enterprise decision-making, and opens avenues for human-guided AI in both research and business environments.

Dr. Riley J. Mangan is a computational biologist whose interests span regulatory epigenomics, comparative genomics, and precision medicine. He is a postdoctoral fellow in the MIT Computational Biology Group, part of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT. He is also a postdoctoral scholar at the Broad Institute of MIT and Harvard and a T32-funded research fellow in the Genetics Training Program at Harvard Medical School. He holds a PhD in Molecular Genetics and Microbiology from Duke University.

Poster Abstract: Psychiatric disorders impose significant public health burdens, yet their highly polygenic nature and variable treatment response among individual patients complicate effective treatment. We aim to address these challenges by identifying the molecular components that define patient subtypes and leveraging these data for predictive models of treatment response through a pathway-centric approach integrating single-cell multiomics, genetics, and electronic health records (EHR). To this end, we dissect the shared and disorder-specific molecular components of psychiatric disorders by integrating single-cell RNA sequencing and clinical phenotyping data gathered from 72 individuals with schizophrenia or bipolar disorder. We identify shared and disorder-distinct differential gene expression and cell-type-specific gene co-expression modules. Using our novel Cell-Projected Phenotypes methodology, we also identify disorder-enriched transcriptional cell states. From here, we will present our 'network convergence' model, which relates genetic variants, including both common/rare and coding/noncoding variants, to characterize the molecular mechanisms of pathway dysregulation in psychiatric disorders, employing deep learning models and evolutionary signatures to predict regulatory effects and mutational impacts on protein structure. Finally, we build interpretable multimodal predictive models of antidepressant treatment response informed by both patient-specific pathway dysregulation profiles imputed from patient genotypes and EHR-derived clinical phenotypic features. We aim to produce the most comprehensive molecular map to date of the shared and disorder-specific molecular components of psychiatric disorders to enable precision, mechanistic and genetics-informed predictive models of personalized treatment response.

Robert Calef is a PhD student in Electrical Engineering and Computer Science at MIT, advised by Professor Manolis Kellis and Harvard Associate Professor Marinka Zitnik. His research focuses on machine learning for biological discovery, specifically protein representation learning and multimodal language models combining text and protein data. He earned his BSc in Bioinformatics with a minor in Mathematics from UC Santa Cruz, graduating summa cum laude. From 2015 to 2023, Calef held various roles in industry, most recently as a Staff Machine Learning Scientist at GRAIL, Inc. where he developed machine learning models for the Galleri cancer test. At Dovetail Genomics, he co-developed HiRise, a genome assembly pipeline. His research has been published in Cancer Cell, Annals of Oncology, and Genome Research, and he holds several patents in cancer detection.

Poster Abstract: Understanding the roles of human proteins remains a major challenge, with approximately 20% of human proteins lacking known functions and more than 40% missing context-specific functional insights. Even well-annotated proteins are often poorly characterized in diverse biological contexts, disease states, and perturbations. We present ProCyon, a foundation model for modeling, generating, and predicting protein phenotypes across five interrelated knowledge domains: molecular functions, therapeutic mechanisms, disease associations, functional protein domains, and molecular interactions. To support this, we created ProCyon-Instruct, a dataset of 33 million protein phenotype instructions, representing a comprehensive resource for multiscale protein phenotypes. By co-training a large language model with multimodal molecular encoders, ProCyon integrates phenotypic and protein data. A novel architecture and instruction tuning strategy allow ProCyon to process arbitrarily interleaved protein-and-phenotype inputs, achieve zero-shot task transfer, and generate free-form text phenotypes interleaved with retrieved protein sequence, structure, and drug modalities in a single unified model. ProCyon achieves strong performance against single-modality models, multimodal models such as ESM3, as well as text-only LLMs on dozens of benchmarking tasks such as contextual protein retrieval and question answering. We extensively evaluate ProCyon for biological applications, including identifying protein domains that bind small molecule drugs, predicting peptide binding with enzymes, and assessing the functional impact of Alzheimer's disease mutations. ProCyon enables conditional retrieval of proteins linked to small molecules through complementary mechanisms of action. It generates candidate phenotypes for under-characterized proteins recently implicated in Parkinson's disease, facilitating hypothesis generation for poorly understood proteins and biological processes. 
ProCyon paves the way toward an effective, general solution for functional protein biology that can enable new insights into the human proteome.

Saathvik Kannan is a first-year undergraduate student at MIT majoring in Computer Science and Molecular Biology. He is passionate about developing computational platforms towards understanding protein structure and accelerating drug development. These include DrugGen, a graph-based neural network approach for discovering precision medicine inhibitors, and BioPLEX, a platform for predicting protein complex structures and their mutations. Kannan's work has earned him several accolades, including the Regeneron Young Scientist Award and first place at the Junior Science and Humanities Symposium in Biomedical Sciences. Moreover, Kannan has nine first-author publications and one pending US patent for the development of a novel drug.

Poster Abstract: AI is poised to have a dramatic impact on the human condition, and perhaps no area will be more long-lasting than improvements in human health and longevity, through deeper understanding of cellular states, protein structure and function, molecular circuitry, and new therapeutics to modulate them and reverse human disease. This is also an area where human creativity needs dramatic assistance, as human intuition evolved for very different scales, and is—without assistance—ill-matched to the extremely small, intricate, diverse, foreign, and vast scale of chemical structures, protein folds, cellular circuits, disease etiologies, and the resulting diversity in patient molecular, cellular, and phenotypic states. (1) To address this challenge, we leverage joint latent space embeddings of chemical structure and function across both small molecule drugs and target proteins. We have created chemically- and functionally-interpretable drug landscapes that enable generation of novel molecules, refinement of existing molecules, human-guided design of new molecules, and function-driven improvements. (2) To enrich the chemical landscape with human-interpretable natural language annotations, we have also co-embedded drug patents, along with the chemical structures described within them, revealing important structure-function relationships crucial for drug discovery, providing an integrated drug development environment for model-driven drug discovery. (3) We have also created the ability to generate ‘missing’ molecules that interpolate empty spaces in the latent embeddings, thus enabling users to generate and in silico test specific regions of the chemical landscape at much higher resolution, using “outbeddings”, that generate candidate molecules directly from embedding coordinates for drug optimization. 
(4) Further, to align chemical landscapes to their target proteins and enable structural optimization and drug development tasks, we built a state-of-the-art binding affinity prediction model, AffinityNet-8, which leverages foundation protein language models, protein domain information, patent documents, and structural descriptors to predict affinity and produce a joint embedding of various modalities. AffinityNet-8 achieves top-ranked performance on the TDCommons drug-target interaction benchmark, surpassing all previous methods. This model serves as the backbone for our latent space embeddings. (5) To engage human creators and their unique creativity, we have developed Mantis, a cognitive cartography workbench, which allows users to navigate a co-embedding of the human proteome and large-scale compound libraries, with millisecond responsiveness. (6) Lastly, leveraging the human-AI interaction paradigm, we have created agentic workflows that enable further molecule optimization iterations through an iterative reinforcement learning framework and task-specific fine-tuning. Overall, our combined approach enables next-generation AI-powered drug generation with human guidance and refinement.

Shannon Shen is a 3rd year PhD student at MIT, advised by Professor David Sontag. His research lies at the intersection between NLP and HCI. Right now, Shen focuses on human and LLM agent collaboration for scientific discovery and methods to support human verification of LLM generations. He also developed impactful software for document-parsing that has been downloaded more than 3 million times, and won best paper awards at EMNLP and ACL.

Poster Abstract: Agents powered by large language models are increasingly used to support end users in scenarios like code generation or data analysis. Such intelligence augmentation tasks often require multi-turn, long-form interactions in which humans and agents cooperate to achieve the end task. Because these agents are often evaluated in static, output-only setups, the evaluations do not capture the complex dynamics of human-agent collaboration, such as grounding and feedback. In this work, we present a framework to assess agents in these collaborative settings, emphasizing how agent helpfulness scales with the human effort spent in the collaboration process.

Tanner Andrulis received B.S. degrees in Computer Engineering and Math from Purdue University in 2021. He is currently pursuing his Ph.D. degree under the supervision of Professor Vivienne Sze and Professor of the Practice Joel Emer. His research focuses on the design and modeling of tensor accelerators, especially emerging analog and processing-in-memory architectures. Tanner was a recipient of the MIT Presidential Fellowship in 2021.

Poster Abstract: Compute-In-Memory (CiM) is a promising solution to accelerate Deep Neural Networks (DNNs) as it can avoid energy-intensive DNN weight movement and use memory arrays to perform low-energy, high-density computations. These benefits have inspired research across the CiM stack, but CiM research often focuses on only one level of the stack (i.e., devices, circuits, architecture, workload, or mapping) or only one design point (e.g., one fabricated chip). There is a need for a full-stack modeling tool to evaluate design decisions in the context of full systems (e.g., see how a circuit impacts system energy) and to perform rapid early-stage exploration of the CiM co-design space. To address this need, we propose CiMLoop: an open-source tool to model diverse CiM systems and explore decisions across the CiM stack. CiMLoop introduces (1) a flexible specification that lets users describe, model, and map workloads to both circuits and architecture, (2) an accurate energy model that captures the interaction between DNN operand values, hardware data representations, and analog/digital values propagated by circuits, and (3) a fast statistical model that can explore the design space orders-of-magnitude more quickly than other high-accuracy models. Using CiMLoop, researchers can evaluate design choices at different levels of the CiM stack, co-design across all levels, fairly compare different implementations, and rapidly explore the design space.

Xingran (Maggie) Du is a third-year PhD student co-advised by Professor Daniel Sanchez and Professor of the Practice Joel Emer. She is interested in exploiting sparsity in new ways to accelerate conventionally dense computation. In her previous project, she designed an accelerator for automata processing.

Poster Abstract: Automata processing has wide applications in network intrusion detection, bioinformatics, and machine learning. Automata graphs are often sparse due to the inherent structure of the patterns they represent, having 1 to 2 neighbors per graph node on average. Exploiting sparsity using compressed data representations can be challenging, because as we gain storage efficiency, we may lose access throughput. We design an accelerator that exploits sparsity to get good throughput per area by combining compressed and uncompressed data representations. We evaluate our system on the AutomataZoo benchmark suite and achieve 2.5× and 2.2× speedup iso-area over state-of-the-art accelerators.
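The storage-versus-access trade-off described in the abstract can be illustrated in software. The sketch below is not the accelerator's design; it compares a dense adjacency matrix with compressed sparse row (CSR) storage for a small graph with one neighbor per node. The helper names `to_dense`, `to_csr`, and `csr_neighbors` are illustrative.

```python
from typing import List, Tuple

Edge = Tuple[int, int]


def to_dense(num_nodes: int, edges: List[Edge]) -> List[List[int]]:
    """Uncompressed form: one row of num_nodes entries per node."""
    dense = [[0] * num_nodes for _ in range(num_nodes)]
    for src, dst in edges:
        dense[src][dst] = 1
    return dense


def to_csr(num_nodes: int, edges: List[Edge]) -> Tuple[List[int], List[int]]:
    """Compressed form: row offsets into a flat list of neighbors."""
    counts = [0] * num_nodes
    for src, _ in edges:
        counts[src] += 1
    offsets = [0]
    for count in counts:
        offsets.append(offsets[-1] + count)
    neighbors = [0] * len(edges)
    cursor = offsets[:-1].copy()  # next free slot for each source node
    for src, dst in sorted(edges):
        neighbors[cursor[src]] = dst
        cursor[src] += 1
    return offsets, neighbors


def csr_neighbors(offsets: List[int], neighbors: List[int], node: int) -> List[int]:
    """Neighbor lookup needs two offset reads before touching the data."""
    return neighbors[offsets[node]:offsets[node + 1]]


# An 8-node ring: every node has exactly one outgoing neighbor.
num_nodes = 8
edges = [(i, (i + 1) % num_nodes) for i in range(num_nodes)]

dense = to_dense(num_nodes, edges)
offsets, neighbors = to_csr(num_nodes, edges)

dense_cells = num_nodes * num_nodes         # 64 stored entries
csr_cells = len(offsets) + len(neighbors)   # 9 offsets + 8 neighbors = 17
```

The dense form stores 64 entries but reads a whole row with a single index, while the CSR form stores 17 entries at the cost of an extra level of indirection on every lookup.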

Yunyi Shen is a 3rd year PhD student in MIT EECS with Associate Professor Tamara Broderick. He has completed master's degrees in computer science (MIT) as well as statistics and wildlife ecology (UW-Madison). He works on developing novel machine learning methodologies for scientific applications, especially biology, ecology, and physics. His current work innovates in generative AI, optimal transport, distributional time series, and simulation-based inference.

Poster Abstract: Practitioners often aim to infer an unobserved population trajectory using sample snapshots at multiple time points. For example, given single-cell sequencing data, scientists would like to learn how gene expression changes over a cell’s life cycle. But sequencing any cell destroys that cell, so we can access data for any particular cell only at a single time point, though we have data across many cells. The deep learning community has recently explored using Schrödinger bridges (SBs) and their extensions in similar settings. However, existing methods either (1) interpolate between just two time points or (2) require a single fixed reference dynamic (often set to Brownian motion within SBs). But learning piecewise from adjacent time points can fail to capture long-term dependencies. And practitioners are typically able to specify a model family for the reference dynamic but not the exact values of the parameters within it. So we propose a new method that (1) learns the unobserved trajectories from sample snapshots across multiple time points and (2) requires specification only of a family of reference dynamics, not a single fixed one. We demonstrate the advantages of our method on simulated and real data.

Zi Yu Xue (Fisher) is a 3rd-year PhD student at MIT working with Professor Vivienne Sze and Professor of the Practice Joel S. Emer. His research focuses on energy-efficient hardware accelerators for sparse data.

Poster Abstract: Sparse tensor algebra is a challenging class of workloads to accelerate due to low arithmetic intensity and varying sparsity patterns. Prior sparse tensor algebra accelerators have explored tiling sparse data to increase exploitable data reuse and improve throughput, but typically allocate tile size in a given buffer for the worst-case data occupancy. This severely limits the utilization of available memory resources and reduces data reuse. Other accelerators employ complex tiling during preprocessing or at runtime to determine the exact tile size based on its occupancy. We propose a speculative tensor tiling approach, called overbooking, to improve buffer utilization by taking advantage of the distribution of nonzero elements in sparse tensors to construct larger tiles with greater data reuse. To ensure correctness, we propose a low-overhead hardware mechanism, Tailors, that can tolerate data overflow by design while ensuring reasonable data reuse. We demonstrate that Tailors can be easily integrated into the memory hierarchy of an existing sparse tensor algebra accelerator. To ensure high buffer utilization with minimal tiling overhead, we introduce a statistical approach, Swiftiles, to pick a tile size so that tiles usually fit within the buffer's capacity, but can potentially overflow, i.e., it overbooks the buffers. Across a suite of 22 sparse tensor algebra workloads, we show that our proposed overbooking strategy introduces an average speedup of 52.7× and 2.3× and an average energy reduction of 22.5× and 2.5× over ExTensor without and with optimized tiling, respectively.
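To make the overbooking idea concrete, here is a simplified sketch; it is not the Swiftiles algorithm itself. It assumes tiles are groups of consecutive matrix rows, that a tile's occupancy is its nonzero count, and that we search tile sizes by brute force. The names `tile_occupancies`, `fraction_fitting`, `pick_tile_rows`, and the `min_fit_fraction` parameter are hypothetical.

```python
from typing import List


def tile_occupancies(row_nnz: List[int], tile_rows: int) -> List[int]:
    """Nonzeros per tile when each tile covers tile_rows consecutive rows."""
    return [sum(row_nnz[i:i + tile_rows]) for i in range(0, len(row_nnz), tile_rows)]


def fraction_fitting(row_nnz: List[int], tile_rows: int, capacity: int) -> float:
    """Fraction of tiles whose occupancy fits in a buffer of the given capacity."""
    occupancies = tile_occupancies(row_nnz, tile_rows)
    return sum(1 for occ in occupancies if occ <= capacity) / len(occupancies)


def pick_tile_rows(row_nnz: List[int], capacity: int, min_fit_fraction: float) -> int:
    """Largest tile size for which at least min_fit_fraction of tiles fit.

    min_fit_fraction = 1.0 is worst-case sizing (no tile may overflow);
    smaller values overbook the buffer and accept occasional overflow.
    Falls back to 1 row per tile if no size meets the target.
    """
    best = 1
    for tile_rows in range(1, len(row_nnz) + 1):
        if fraction_fitting(row_nnz, tile_rows, capacity) >= min_fit_fraction:
            best = tile_rows
    return best


# Nonzeros per row of a toy sparse matrix; row 4 is an outlier.
row_nnz = [2, 1, 3, 2, 9, 1, 2, 2, 1, 3, 2, 1]
capacity = 10

worst_case = pick_tile_rows(row_nnz, capacity, min_fit_fraction=1.0)
overbooked = pick_tile_rows(row_nnz, capacity, min_fit_fraction=0.75)
```

With this toy data, worst-case sizing selects 2 rows per tile, while allowing a quarter of the tiles to overflow selects 3 rows per tile. The overflowing tile would then need a mechanism that tolerates overflow, which is the role the abstract assigns to Tailors.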

After attending Carnegie Mellon University for a bachelor's degree in computer science and working at OtterTune, a start-up for database optimization, Ziyu Zhang started her doctoral studies at MIT CSAIL in 2023, working with Principal Research Scientist Michael Cafarella and Associate Professor Julian Shun. She is interested in developing algorithms to tackle large-scale, semantically complex data management problems. Currently, she is actively working on vector search algorithms and co-designing them with AI-powered data systems.

Poster Abstract: Question-Answering (QA) is a long-standing research area with many potential applications, such as web search augmentation, customer service, business insight discovery, and education assistance. Large language models (LLMs) and related knowledge base management methods have brought about significant breakthroughs in open-domain and textual knowledge-based QA. However, there have been no apparent robust solutions when the dataset the questions are based on is multimodal and may contain both structured and unstructured data. We propose Caravaggio, a framework for integrating such a dataset to accurately answer user questions based on it. We explore methods including performing query-specific featurization on the dataset, improved multi-modal aligned retrieval, and integrating the data based on feature relations within the framework. We present preliminary results on three diverse multimodal datasets.