Research

Ever had an idea for something that looked cool, but wouldn’t work well in practice? When it comes to designing things like decor and personal accessories, generative artificial intelligence (genAI) models can relate. They can produce creative and elaborate 3D designs, but when such blueprints are fabricated into real-world objects, the results usually don’t hold up to everyday use.

A firm that wants to use a large language model (LLM) to summarize sales reports or triage customer inquiries can choose between hundreds of unique LLMs with dozens of model variations, each with slightly different performance.

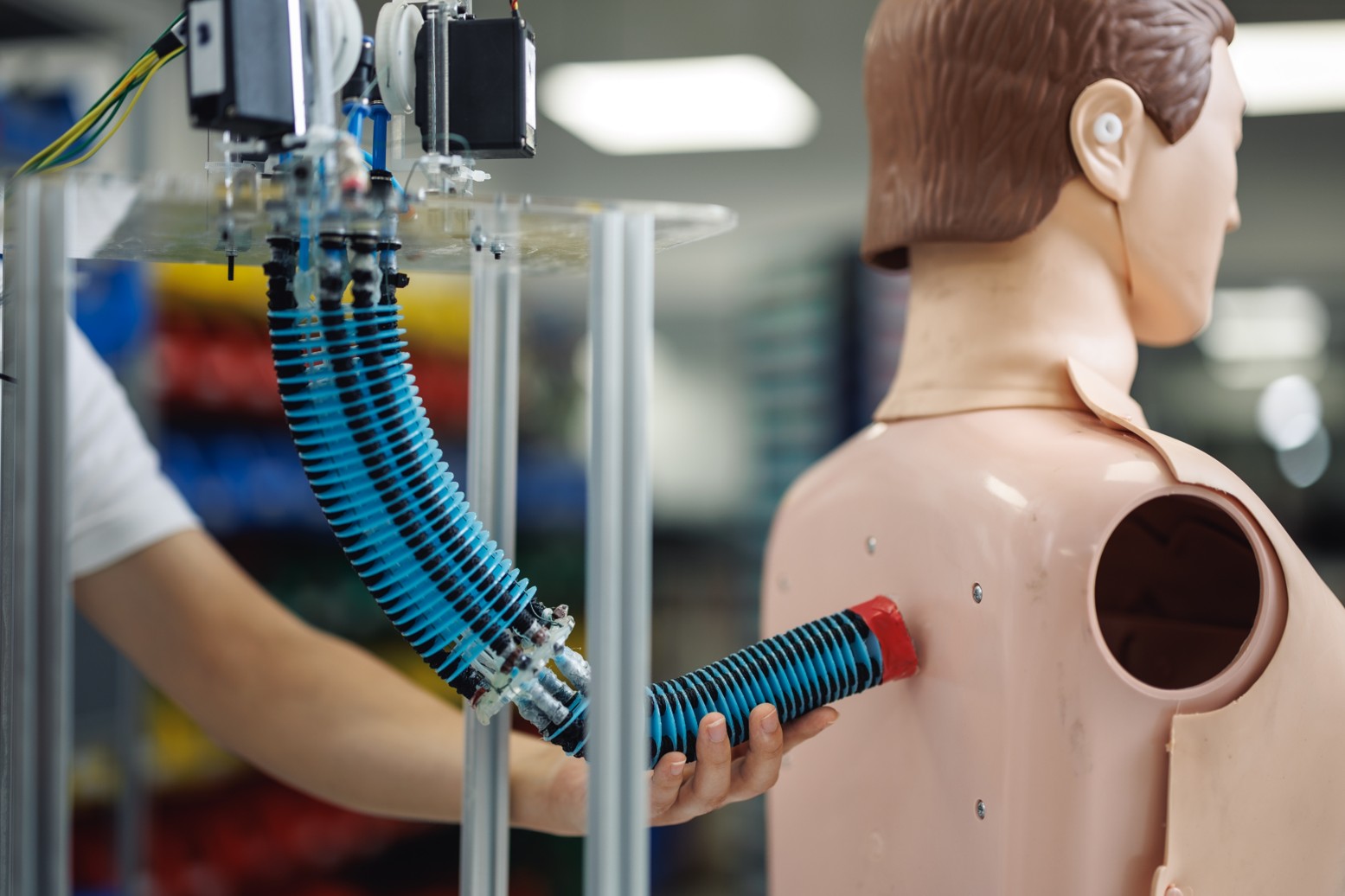

Researchers from the Singapore-MIT Alliance for Research and Technology’s (SMART) Mens, Manus & Machina (M3S) interdisciplinary research group and the National University of Singapore (NUS), alongside collaborators from the Massachusetts Institute of Technology (MIT) and Nanyang Technological University (NTU Singapore), have developed an AI control system that enables soft robotic arms to learn a wide repertoire of motions and tasks once, then adjust to new scenarios on the fly without retraining or sacrificing functionality. This breakthrough brings soft robotics closer to human-like adaptability for real-world applications, such as assistive robotics, rehabilitation robots, and wearable or medical soft robots, by making them more intelligent, versatile, and safe.