Research

In most languages, meaning depends on word position and sentence structure. For example, “The cat sat on the box” is not the same as “The box was on the cat.” Over a long text, like a financial document or a novel, the syntax of these words likely evolves.

To innovate as a technologist, you need to be a polyglot: fluent in multiple languages of problem-solving and able to synthesize ideas across domains, reframe puzzles to visualize different outcomes, and reveal the questions that have yet to be asked.

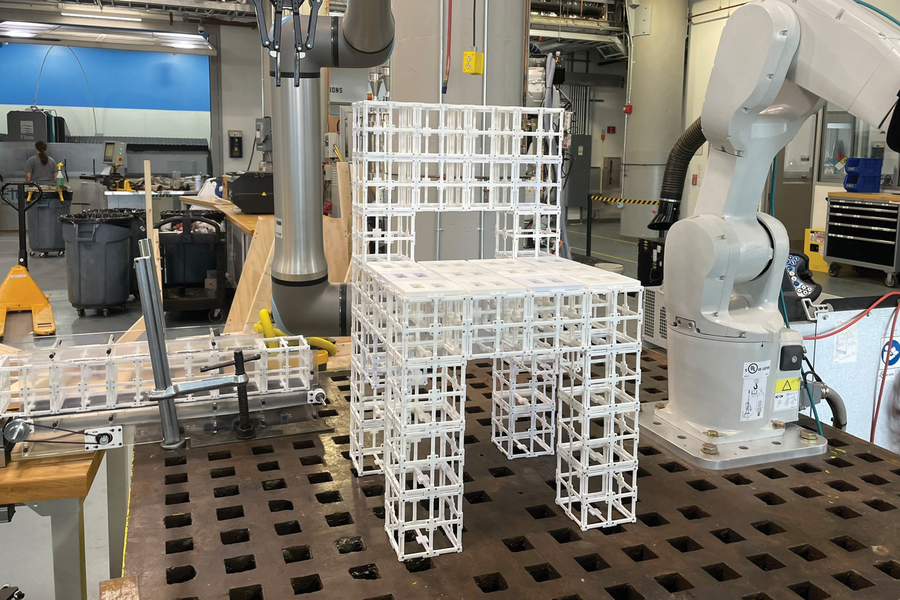

Computer-aided design (CAD) systems are tried-and-true tools used to design many of the physical objects we use each day. But CAD software requires extensive expertise to master, and many tools incorporate such a high level of detail that they don’t lend themselves to brainstorming or rapid prototyping.